This World Press Freedom Day, 11 young climate reporters form the first-ever Kenya Youth Multimedia Room to #StandwithPressFreedom amid the current climate crisis. Composed of climate journalists, storytellers, content creators, filmmakers, entrepreneurs and radio presenters, the Youth Multimedia Room aims to cover Kenya’s national celebrations and amplify the #WPFD2024 call to protect the freedom of environmental journalists.

In Kenya, the celebrations take place at the Edge Convention Centre, organized by the Media Council of Kenya in close partnership with UNESCO.

The youth reporters join us from all walks of life and all parts of Kenya. Zamzam Bonayo (centre) and her brother, Hussein Bonayo (left), have travelled 8 hours by bus from Marsabit, a county in Northern Kenya, to be part of this press movement for the planet. At their organisation, Northern Vision, Zamzam and her brother weave storytelling and climate activism together to cultivate peace, resilience and hope in marginalized communities as they work towards a just and sustainable future.

Linah Mbeyu Mohamed is a journalist with a cause: she wants to show that persons with disabilities are limitless when given opportunities in society. As part of the Youth Multimedia Room, she collects audio interviews and collaborates with other youth reporters to co-create quality coverage of the event and related issues.

Next to her, Kamadi Amata from Mtaani Radio represents the community media fraternity as a Radio Editor and popular morning show host. Previously, Kamadi led a radio campaign to sensitise communities to the importance of building ‘Amani’ (peace) online under UNESCO’s Social Media 4 Peace project. Today, he is here to raise his community’s awareness of the importance of a free press in combatting climate mis- and disinformation.

Members of the National Coalition on Freedom of Expression and Content Moderation in Kenya (FECoMo) are also present at the conference. Dr. Ruth Owino (Kabarak University) and Susan Wafula (National Council for Persons with Disabilities) call on the press to report on the climate crisis with intention and urgency.

FECoMo’s capable advocacy leads are also on the ground as Youth Multimedia Room coordinators. Immaculate Onyango (National Steering Committee on Peacebuilding and Conflict Management) steers the Youth Multimedia Room’s operations. She hustles around all day, coordinating videography and interview requests and connecting Youth Reporters to foster content collaborations.

Viola Konji (Pwani Teknowgalz) (right) is another FECoMo associate co-coordinating the newsroom. When she is not posting messages and photos to FECoMo’s social media channels, she looks after the well-being of the Youth Multimedia Room team. Next to her, foreign policy analyst and medical practitioner Tevivona Ayien explores the nexus between health and the environment at this year’s climate-focused conference.

The Youth Multimedia Room reporters work around the clock to ensure strong coverage of #WPFD2024 messages. Nelson Juma (in blue) is at work collecting perspectives with tripod and camera in hand. As a filmmaker with 8 years of directing, cinematography, producing and editing under his belt, Nelson brings expertise that helps the Youth Multimedia Room capture #PressFreedom narratives and curate them for a wider audience. The photographs featured in this photo essay were taken by Nelson.

This inaugural Kenya Youth Multimedia Room mirrors a long-standing initiative at UNESCO’s global World Press Freedom Day Conference. Each year, young journalists from across the world are selected to join the UNESCO Global Youth Newsroom (renamed ‘Multimedia Room’ to reflect today’s diverse media landscape), where they are trained and supported to produce engaging coverage of UNESCO’s WPFD celebrations.

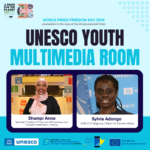

2024 also marks the first time that Kenya is represented at the global UNESCO Youth Multimedia Room. Attending the conference in Santiago, Chile, are Shampi Ana (left) and Sylvia Adongo (second from right), who join the UNESCO Youth Multimedia Room as representatives of Kenya’s Social Media 4 Peace project.

Amid the current information and climate crises, the UNESCO Youth Multimedia Rooms, both in Kenya and globally, reflect the energy, creativity and collaborative spirit that young people bring to tackling the climate crisis in the digital age.

- Immaculate Onyango & Ibrahim Lutta – If there is no press freedom (Reel)

- Boniface Harrison – The Essence of A Free Press for the Planet (Article)

- Wesley Langat – Free Press is Crucial for Tackling Climate Change Crisis (Article)

- Shelmith Wambui – World Press Freedom Day 2024 (Article)

- Linah Mbeyu Mohamed – Disability World on Floods (Article)

- Kamadi Amata – Uanahabari wa Maudhui (Kiswahili Radio Programme)

- Tevivona Ayien – A Long Walk to Press Freedom (Article)

- Zamzam Bonaya – Voices from WPFD2024 (Social Media)

- Zamzam Bonaya and Hussein Boru – WPFD 2024 (Short Reel)

- Viola Konji – Kenya Youth Multimedia Room 2024 (Report)

Learn more about the youth climate media champions of Kenya’s first Youth Multimedia Room below:

Ruth uses LinkedIn to share insights into her work as a Network Engineer at Tanda Community Network and to build a community of like-minded professionals.

Denis at the close of the “Influence for Impact” workshop. He is a 23-year-old community mobiliser and human rights advocate.

Angela Minayo, a lawyer and Digital Rights and Policy Programmes Officer from ARTICLE 19 Eastern Africa and FECoMo Kenya, conducts a session on the interplay between content moderation and human rights.